Noninvasive Glucose Monitoring Using Polarized Light

We propose a compact noninvasive glucose monitoring system using polarized light: a user simply places her palm on the device to measure her current glucose concentration. The primary innovation of our system is its ability to minimize light scattering from the skin and extract weak changes in light polarization to estimate glucose concentration, all using low-cost hardware. Our system exploits multiple wavelengths and light intensity levels to mitigate the effect of user diversity and confounding factors (e.g., collagen and elastin in the dermis). It then infers glucose concentration using a generic learning model, so no additional calibration is needed. We design and fabricate a compact (17 cm x 10 cm x 5 cm) and low-cost (<$250) prototype using off-the-shelf hardware. We evaluate our system with 41 diabetic patients and 9 healthy participants. In comparison to a continuous glucose monitor approved by the U.S. Food and Drug Administration (FDA), 89% of our results are within zone A (clinically accurate) of the Clarke Error Grid. The absolute relative difference (ARD) is 10%. The Pearson correlation between our predicted glucose concentration and the reference glucose concentration has r = 0.91 and p = 1.6×10^{-143}. These errors are comparable with FDA-approved glucose sensors, which achieve about 90% clinical accuracy with a 10% mean ARD. This work will be presented at SenSys'20. [PDF]
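The accuracy metrics quoted above (zone A of the Clarke Error Grid, mean ARD, and Pearson's r) can be computed from paired readings roughly as follows. This is a minimal sketch, not the evaluation code used in the study: it assumes the commonly cited zone A rule (prediction within 20% of the reference, or both values below 70 mg/dL), and the sample readings are made up for illustration.

```python
import math


def mean_ard(pred, ref):
    """Mean absolute relative difference, as a fraction of the reference value."""
    return sum(abs(p - r) / r for p, r in zip(pred, ref)) / len(ref)


def in_zone_a(p, r):
    """Assumed zone A rule: within 20% of reference, or both below 70 mg/dL."""
    return abs(p - r) <= 0.2 * r or (p < 70 and r < 70)


def zone_a_fraction(pred, ref):
    """Fraction of paired readings that fall in zone A."""
    return sum(in_zone_a(p, r) for p, r in zip(pred, ref)) / len(ref)


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


# Hypothetical paired readings in mg/dL (not data from the study).
reference = [100.0, 150.0, 200.0, 60.0]
predicted = [110.0, 140.0, 260.0, 65.0]

print(f"mean ARD: {mean_ard(predicted, reference):.4f}")
print(f"zone A fraction: {zone_a_fraction(predicted, reference):.2f}")
print(f"Pearson r: {pearson_r(predicted, reference):.3f}")
```

In this toy data the third reading misses the reference by 30%, so it falls outside zone A even though the other three are within tolerance.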

Battery-Free Eye Tracker on Glasses

We present a battery-free wearable eye tracker that tracks both the 2D position and diameter of a pupil based on its light absorption property. With a few near-infrared (NIR) lights and photodiodes around the eye, NIR lights sequentially illuminate the eye from various directions while photodiodes sense spatial patterns of reflected light, which are used to infer the pupil’s position and diameter on the fly via a lightweight inference algorithm. The system also exploits characteristics of different eye movement stages and adjusts its sensing and computation accordingly for further energy savings. A prototype is built with off-the-shelf hardware components and integrated into a regular pair of glasses. This work was presented at MobiCom'18. [PDF]

Experiments with 22 participants show that the system achieves 0.8-mm mean error in tracking pupil position (2.3 mm at the 95th percentile) and 0.3-mm mean error in tracking pupil diameter (0.9 mm at the 95th percentile) at a 120-Hz output frame rate, consuming 395 μW mean power supplied by two small, thin solar cells on the glasses' side arms.

Self-Powered Gesture Recognition with Ambient Light

We present a self-powered module for gesture recognition that utilizes small, low-cost photodiodes for both energy harvesting and gesture sensing. Operating in the photovoltaic mode, photodiodes harvest energy from ambient light. In the meantime, the instantaneously harvested power from individual photodiodes is monitored and exploited as a clue for sensing finger gestures in proximity. Harvested power from all photodiodes is aggregated to drive the whole gesture-recognition module, including a micro-controller running the recognition algorithm. We design a robust, lightweight algorithm to recognize finger gestures in the presence of ambient light fluctuations. We fabricate two prototypes to facilitate users’ interaction with smart glasses and smart watches. This work was presented at UIST'18. [PDF]

Results show 99.7%/98.3% overall precision/recall in recognizing five gestures on glasses and 99.2%/97.5% precision/recall in recognizing seven gestures on the watch. The system consumes 34.6 µW/74.3 µW for the glasses/watch and thus can be powered by the energy harvested from ambient light.

LiGaze

Ultra-Low Power Gaze Tracking for Virtual Reality

We present LiGaze, a low-cost, low-power approach to gaze tracking tailored to VR. It relies on a few low-cost photodiodes, eliminating the need for cameras and active infrared emitters. Reusing light emitted from the VR screen, LiGaze leverages photodiodes around a VR lens to measure reflected screen light in different directions. It then infers gaze direction by exploiting the pupil’s light absorption property. The core of LiGaze is to deal with screen light dynamics and extract changes in reflected light related to pupil movement. LiGaze infers a 3D gaze vector on the fly using a lightweight regression algorithm. We design and fabricate a LiGaze prototype using off-the-shelf photodiodes. LiGaze’s simplicity and ultra-low power make it applicable in a wide range of VR headsets to better unleash VR’s potential. This work was presented at SenSys'17 and was a Best Paper nominee. [PDF][PROJECT WEBSITE]

LiGaze achieves 6.3° and 10.1° mean within-user and cross-user accuracy, respectively. Its sensing and computation consume 791 µW in total and thus can be completely powered by a credit-card-sized solar cell harvesting energy from indoor lighting.

Aili

Reconstructing Hand Poses Using Visible Light

Free-hand gestural input is essential for emerging user interactions. We present Aili, a table lamp reconstructing a 3D hand skeleton in real time, requiring neither cameras nor on-body sensing devices. Aili consists of an LED panel in a lampshade and a few low-cost photodiodes embedded in the lamp base. To reconstruct a hand skeleton, Aili combines 2D binary blockage maps from vantage points of different photodiodes, which describe whether a hand blocks light rays from individual LEDs to all photodiodes. Empowering a table lamp with sensing capability, Aili can be seamlessly integrated into the existing environment. Relying on such low-level cues, Aili entails lightweight computation and is inherently privacy-preserving. We build and evaluate an Aili prototype. We also conduct user studies to examine the privacy issues of Leap Motion and solicit feedback on Aili’s privacy protection. We conclude by demonstrating various interaction applications Aili enables. This work was presented at UbiComp'17. [PDF][PROJECT WEBSITE]

Aili achieves 10.2° mean angular deviation and 2.5-mm mean translation deviation in comparison to Leap Motion.
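To make the idea of a 2D binary blockage map concrete, the sketch below builds one map for a single photodiode by comparing per-LED light readings against a no-hand baseline. It is only an illustration under stated assumptions: the function name `blockage_map`, the `drop_ratio` threshold, and the sample readings are hypothetical, and how Aili actually separates per-LED contributions and thresholds them is not described here.

```python
def blockage_map(baseline, current, drop_ratio=0.5):
    """Build a 2D binary map for one photodiode.

    baseline and current are 2D lists indexed like the LED panel grid;
    each entry is the light level this photodiode attributes to one LED.
    An entry is marked 1 (blocked) when the current level falls below
    drop_ratio times the no-hand baseline (a hypothetical threshold).
    """
    return [
        [1 if cur < drop_ratio * base else 0 for base, cur in zip(base_row, cur_row)]
        for base_row, cur_row in zip(baseline, current)
    ]


# Hypothetical readings for a 2 x 3 LED grid seen by one photodiode.
baseline = [[10.0, 10.0, 10.0],
            [10.0, 10.0, 10.0]]
current = [[9.5, 3.0, 9.8],
           [2.0, 1.5, 9.9]]

for row in blockage_map(baseline, current):
    print(row)
```

Repeating this for each photodiode gives one map per vantage point, which is the kind of input the description above says is combined to reconstruct the hand skeleton.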

StarLight

Human Sensing Using VLC

We present StarLight, an infrastructure-based sensing system that reuses light emitted from ceiling LED panels to reconstruct fine-grained user skeleton postures continuously in real time. It relies on only a few (e.g., 20) photodiodes placed at optimized locations to passively capture low-level visual clues (light blockage information), with neither cameras capturing sensitive images, nor on-body devices, nor electromagnetic interference. It then aggregates the blockage information of a large number of light rays from LED panels and identifies best-fit 3D skeleton postures. StarLight greatly advances the prior light-based sensing design by dramatically reducing the number of intrusive sensors, overcoming furniture blockage, and supporting user mobility. We build and deploy StarLight in a 3.6 m x 4.8 m office room, with 20 customized LED panels and 20 photodiodes. This work was presented at MobiSys'16 and won the SIGMOBILE Research Highlights Award. [PDF][PROJECT WEBSITE]

StarLight achieves 13.6° mean angular error for five body joints and reconstructs a mobile skeleton at 40 FPS frame rate.

LiSense

Human Sensing Using VLC

LiSense is the first-of-its-kind system that enables both data communication and fine-grained, real-time human skeleton reconstruction using Visible Light Communication. LiSense uses shadows created by the human body from blocked light and reconstructs 3D human skeleton postures in real time. Multiple lights on the ceiling lead to diminished and complex shadow patterns on the floor. We design light beacons to separate light rays from different light sources and recover the shadow pattern cast by each individual light. Then, we design an efficient inference algorithm to reconstruct user postures using 2D shadows with a limited resolution collected by photodiodes. This work was presented at MobiCom'15 and won the Best Video Award. [PDF][PROJECT WEBSITE]

LiSense reconstructs the 3D user skeleton at 60 Hz in real time with 10° mean angular error for five body joints.
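One way to picture how light beacons can separate rays from different light sources is frequency division: each light flickers at its own frequency, and a photodiode recovers each light's contribution by correlating its samples against that frequency. The sketch below illustrates this under assumptions that are not taken from the text above: the beacon frequencies, the sample rate, and the helper name `beacon_amplitude` are all hypothetical.

```python
import cmath
import math


def beacon_amplitude(samples, freq_hz, sample_rate_hz):
    """Estimate the amplitude of one beacon frequency in a photodiode trace.

    This is a single-bin discrete Fourier transform: the samples are
    correlated against a complex sinusoid at freq_hz.
    """
    n = len(samples)
    acc = sum(
        s * cmath.exp(-2j * math.pi * freq_hz * k / sample_rate_hz)
        for k, s in enumerate(samples)
    )
    return 2 * abs(acc) / n


# Hypothetical setup: light A flickers at 100 Hz, light B at 150 Hz.
sample_rate = 1000.0
n = 1000
# Constant ambient light (0.5), light A unblocked (1.0), light B mostly blocked (0.2).
samples = [
    0.5
    + 1.0 * math.sin(2 * math.pi * 100 * k / sample_rate)
    + 0.2 * math.sin(2 * math.pi * 150 * k / sample_rate)
    for k in range(n)
]

amp_a = beacon_amplitude(samples, 100.0, sample_rate)
amp_b = beacon_amplitude(samples, 150.0, sample_rate)
print(f"light A: {amp_a:.3f}, light B: {amp_b:.3f}")
```

A low amplitude at one light's frequency suggests that this photodiode sits in the shadow cast by that particular light, while the constant ambient component does not affect either estimate.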

WiScan

Low-Power Pervasive Wi-Fi Connectivity

Pervasive Wi-Fi connectivity is attractive for users in places not covered by cellular services (e.g., when traveling abroad). However, the power drain of frequent Wi-Fi scans undermines the device's battery life, preventing users from staying always connected and fetching synced emails and instant message notifications. We study the energy overhead of scan and roaming in detail and refer to it as the scan tax problem. Our findings show that the main processor is the primary culprit of the energy overhead. We design and build WiScan to fully exploit the gain of scan offloading. This work was presented in UbiComp'15. [PDF]

WiScan achieves 90%+ of the maximal connectivity, while saving 50-62% energy for seeking connectivity.

HiLight

Real-Time Screen-Camera Communication Behind Any Scene

HiLight is a new form of real-time screen-camera communication without showing any coded images (e.g., barcodes) for off-the-shelf smart devices. HiLight encodes data into pixel translucency change atop any screen content, so that camera-equipped devices can fetch the data by turning their cameras to the screen. HiLight leverages the alpha channel, a well-known concept in computer graphics, to encode bits into the pixel translucency change. By removing the need to directly modify pixel RGB values, HiLight overcomes the key bottleneck of existing designs and enables real-time unobtrusive communication while supporting any screen content. This work was presented in MobiSys'15 and won the Best Demo Award. [PDF][CODE][PROJECT WEBSITE]

We design and build HiLight using off-the-shelf smart devices, the first system that realizes on-demand data transmissions in real time unobtrusively atop arbitrary screen content.
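The role of the alpha channel can be illustrated with the standard "over" blend, in which a translucent layer is composited atop the screen content without touching the content's own RGB values. The sketch below is a simplified illustration, not HiLight's actual modulation scheme: the on-off mapping of bits to a small alpha change, the `delta` value, and the decoder that knows the original pixel value are all assumptions made for brevity.

```python
def composite(content, overlay, alpha):
    """Standard 'over' blend for one channel value in [0, 255]."""
    return (1.0 - alpha) * content + alpha * overlay


def encode_frames(content_pixel, bits, delta=0.02):
    """Emit one displayed pixel value per bit.

    A black overlay (value 0) is drawn atop the content; a 1 bit raises
    the overlay's alpha by delta, a 0 bit leaves it fully transparent.
    """
    return [composite(content_pixel, 0.0, delta if b else 0.0) for b in bits]


def decode_frames(frames, content_pixel, delta=0.02):
    """Toy decoder: threshold halfway between the two expected levels.

    A real receiver would not know content_pixel; it is passed here
    only to keep the illustration short.
    """
    threshold = content_pixel * (1.0 - delta / 2)
    return [1 if f < threshold else 0 for f in frames]


bits = [1, 0, 1, 1]
frames = encode_frames(200.0, bits)
print(frames)
print(decode_frames(frames, 200.0))
```

Because the change is expressed through alpha rather than by rewriting pixel colors, the same overlay can sit atop any content, which matches the motivation given in the description above.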

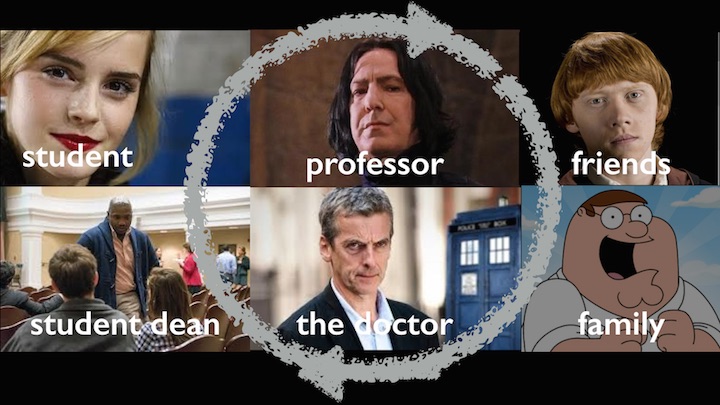

StudentLife

Assessing Mental Health, Academic Performance and Behavioral Trends of College Students using Smartphones

The StudentLife continuous sensing app assesses the day-to-day and week-by-week impact of workload on stress, sleep, activity, mood, sociability, mental well-being and academic performance of a single class of 48 students across a 10-week term at Dartmouth College using Android phones. This work was presented at UbiComp'14 and was a Best Paper Award nominee. [PDF][PROJECT WEBSITE]

The StudentLife study shows a number of significant correlations between the automatic objective sensor data from smartphones and mental health and educational outcomes of the student body.